AI Security: Artificial Intelligence in Cyber Security

Protection mechanisms for the digital future

Cybercrime is becoming both more frequent and more complex. At the same time, artificial intelligence (AI) has become indispensable for companies. Modern IT security solutions therefore need to be designed specifically to protect companies from AI-assisted cyber attacks.

Here you will find out what AI security means and how to safely navigate your way through tomorrow's AI!

What is AI Security?

In the race to reap the benefits of AI systems, the security of these systems is becoming increasingly important. Companies and governments must develop robust AI security strategies to prevent malicious AI-powered attacks and, at the same time, to establish their own AI-assisted defense measures. In addition, the AI systems themselves must be safeguarded against potential attacks using dedicated technologies, tools and frameworks.

Key aspects of secure AI are tamper protection, data security and system integrity. The German Federal Office for Information Security (BSI) recommends giving cyber security top priority. According to the BSI, it will be essential to increase the speed and scope of defense measures. This includes building a resilient IT infrastructure, improving attack detection, strengthening the prevention of social engineering (e.g., awareness training, multi-factor authentication, zero trust architecture) and using the general benefits of AI for defense purposes (e.g., threat and vulnerability detection).

Safeguarding Against AI Attacks

Protecting AI-Assisted Products Against AI Attacks

AI poses considerable risks for IT security: it can create vulnerabilities in existing systems, and it can itself be both the target of and a tool for attacks. The risk of cyber threats is particularly high for the public sector and critical infrastructures. To protect AI-based products against attacks, including attacks carried out by other AIs, companies should implement several strategic measures. Data protection in particular is becoming increasingly relevant. Because AI analyzes large volumes of data, there is a risk that sensitive information could be compromised. Companies therefore need to comply with data privacy regulations and take appropriate security precautions to prevent data misuse.

Averting complex social engineering attacks

Social engineering attacks aim to obtain confidential information or gain access by manipulating human behavior. Companies can significantly reduce the risk of such attacks by training employees and raising their awareness, introducing strict security guidelines for access rights, passwords and security protocols, using anomaly detection and implementing clear-cut response mechanisms.

Detecting and averting prompt injection and manipulation

A key issue is the risk of prompt injection. In a prompt injection attack, manipulated inputs (prompts) cause an AI, particularly a language model, to unknowingly carry out the attacker's intentions. Prompt injections are especially relevant for AI-based applications such as chatbots, AI agents or language models that react to user input. The consequences of a successful prompt injection can vary greatly, ranging from the disclosure of sensitive information to the manipulation of critical decision-making processes under the guise of normal operation. Protective measures include strict prompt validation and filter mechanisms that prevent unsafe or manipulated prompts from being processed. Regular testing and monitoring of model outputs also help detect such attacks early on.
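As an illustration of the prompt validation described above, the following Python sketch screens incoming prompts before they are passed to a language model. The deny-list patterns, the length limit and the names `validate_prompt` and `ValidationResult` are hypothetical choices made for this example. Pattern matching alone is easy to bypass by rephrasing, so it would only be one layer alongside output monitoring and restricted model permissions.

```python
import re
from dataclasses import dataclass

# Hypothetical deny-list of phrasings typical of injection attempts.
# A real filter would be maintained, tested and combined with other controls.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"disregard (the )?system prompt", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.IGNORECASE),
    re.compile(r"you are now (in )?developer mode", re.IGNORECASE),
]

MAX_PROMPT_CHARS = 4000  # assumed limit for this example


@dataclass
class ValidationResult:
    allowed: bool
    reason: str


def validate_prompt(prompt: str) -> ValidationResult:
    """Reject prompts that are too long, contain control characters or match a known injection pattern."""
    if len(prompt) > MAX_PROMPT_CHARS:
        return ValidationResult(False, "prompt too long")
    # Control characters (other than ordinary whitespace) are a common way to hide instructions.
    if any(ord(ch) < 32 and ch not in "\n\r\t" for ch in prompt):
        return ValidationResult(False, "control characters")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            return ValidationResult(False, f"matched pattern: {pattern.pattern}")
    return ValidationResult(True, "ok")
```

A rejected prompt would typically be logged for the monitoring mentioned above rather than silently dropped, so that repeated attempts become visible.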

IT security for AI agents

As AI agents increasingly act autonomously, malfunctions or misinterpretations during data analysis can lead to incorrect security measures. For example, a legitimate user could be wrongly refused access, or an attack could be overlooked. By combining technical robustness, human oversight and continuous improvement (audits, updates and patches), companies can help ensure that AI agents function reliably and that potential risks are minimized.

Use of monitoring and anomaly detection systems

Just as machine learning (ML) can be used to detect anomalies, cybercriminals can use AI-assisted systems to scan networks and systematically search for vulnerabilities through which disguised malware can be introduced and attacks on other (AI) systems carried out. AI-assisted anomaly detection systems can help detect suspicious activities or unexpected behavior within the AI application or infrastructure. They identify unusual patterns that could indicate an attack and improve the ability to respond to it.

Protecting models against data manipulation

AI models trained on sensitive or critical data must be protected against data poisoning attacks. In such attacks, manipulated data is injected into the training data in order to impair the model's performance. To ensure the integrity of the model, the training data must be checked regularly and its integrity monitored. Furthermore, only trustworthy data sources should be used.
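One way to check training data integrity regularly is to record a cryptographic fingerprint of every file while the data is known to be trustworthy, and to compare against it before each training run. The sketch below assumes the training data is stored as files in a single directory; the function names are hypothetical. It detects modified, deleted or added files, but it cannot tell whether data was already poisoned when the manifest was created, which is why trustworthy sources remain essential.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large training files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a SHA-256 fingerprint for every file below data_dir."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Compare the current data against a trusted manifest and list every deviation."""
    current = build_manifest(data_dir)
    problems = []
    for name, digest in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != digest:
            problems.append(f"modified: {name}")
    for name in current.keys() - manifest.keys():
        problems.append(f"unexpected: {name}")
    return sorted(problems)
```

For the check to be meaningful, the manifest itself would have to be stored where an attacker with access to the training data cannot alter it.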

Adversarial robustness and testing of models

Attackers can use so-called adversarial attacks in which slight changes to the input data mislead AI models. To avert such attacks, models should be tested with regard to their robustness against adversarial examples. Various tools can help harden models by running simulated attacks and revealing vulnerabilities.
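To make the idea of adversarial examples concrete, the following sketch applies the Fast Gradient Sign Method (FGSM), one well-known attack, to a simple logistic regression model and measures how accuracy drops as the perturbation budget `eps` grows. The linear model, its weights and the sample data are assumptions chosen for readability; robustness testing tools apply the same principle to much larger models such as neural networks.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], b: float, x: list[float]) -> int:
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


def fgsm_perturb(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """FGSM for logistic regression.

    The gradient of the log-loss with respect to input feature i is
    (sigmoid(z) - y) * w_i, so each feature is shifted by eps in the
    direction of that gradient's sign, i.e. the direction that increases the loss.
    """
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    grad_scale = sigmoid(z) - y
    x_adv = []
    for wi, xi in zip(w, x):
        g = grad_scale * wi
        step = 0.0 if g == 0 else math.copysign(eps, g)
        x_adv.append(xi + step)
    return x_adv


def robust_accuracy(w, b, samples, eps: float) -> float:
    """Share of samples still classified correctly after an FGSM perturbation of size eps."""
    correct = 0
    for x, y in samples:
        correct += predict(w, b, fgsm_perturb(w, b, x, y, eps)) == y
    return correct / len(samples)
```

With `w = [1.0, -1.0]`, `b = 0.0` and the two samples `([0.3, 0.1], 1)` and `([0.1, 0.3], 0)`, accuracy stays at 1.0 for `eps = 0.05` but falls to 0.0 for `eps = 0.2`: small, targeted input changes are enough to flip every prediction.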

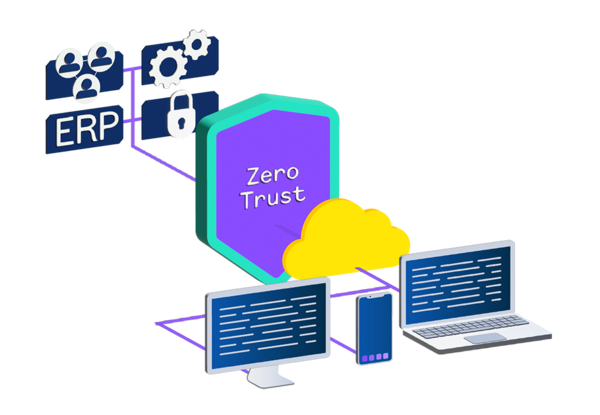

Security architectures and zero trust

Zero trust architectures can help control access to AI models and their training data. With zero trust, every request for access to data or applications is checked and validated so that only authorized systems or users are granted access. This makes it difficult for attackers to penetrate the training or production environment of AI models.
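The zero trust principle of checking every single access request can be sketched as a default-deny authorization function. The identities, resources and the `POLICY` table below are hypothetical; in a real deployment, authentication and device status would come from an identity provider and device management, not from request fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    subject: str            # authenticated user or service identity
    authenticated: bool     # e.g. multi-factor authentication succeeded
    device_compliant: bool  # e.g. device is patched and managed
    resource: str           # e.g. "training-data" or "model-weights"
    action: str             # e.g. "read", "write", "query"


# Hypothetical policy: explicit (subject, resource) pairs mapped to permitted actions.
POLICY = {
    ("data-pipeline", "training-data"): {"read", "write"},
    ("inference-service", "model-weights"): {"read"},
    ("analyst", "model-endpoint"): {"query"},
}


def authorize(req: AccessRequest) -> bool:
    """Evaluate each request on its own merits; network location grants no trust. Default: deny."""
    if not (req.authenticated and req.device_compliant):
        return False
    return req.action in POLICY.get((req.subject, req.resource), set())
```

Because anything not explicitly permitted is denied, a compromised inference service in this example could read the model weights but could neither modify them nor touch the training data.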

White paper

Securely Integrating Generative AI

The main strengths of AI lie in its ability to analyze large amounts of data, adapt through learning processes and solve complex problems. AI increasingly makes decisions independently and implements them. As AI is used in more and more areas, AI security is becoming increasingly important. Traditional IT security architectures are often based on static rules that ward off known threats, and such systems are often unable to adapt to the dynamic processes surrounding AI.

In the white paper “Controlled Intelligence,” we present an architectural proposal for the secure local operation of generative AI models in a zero-trust network with active traffic monitoring and multi-layered perimeter security using solutions from genua.

White paper

Paths for the Secure Use of Generative AI in Business

Solutions from genua for Protecting Your IT Infrastructure

Reliable Protection for Networks, Data and Critical IT Infrastructures

AI-assisted attacks are now commonplace. genua offers a range of specialized IT security applications and solutions that you can use to protect yourself and your IT infrastructure against AI attacks. These solutions are designed for high-security environments and focus in particular on protecting networks, data and critical IT infrastructures.

Safeguarding with AI

How is AI Used as a Tool in Cyber Security?

Many modern IT security products use artificial intelligence to detect and neutralize threats early on. These include tools for anomaly detection and authentication services. In these systems, machine learning (ML) plays a central role in analyzing patterns and detecting atypical behavior so that potential threats can be identified before they cause damage. In this way, AI can help provide consistent, long-term protection for IT systems.

What is Machine Learning?

By training algorithms on historical data, ML enables an AI system to learn what counts as normal behavior. The system can then detect data patterns that deviate from this norm. In its cyber security recommendation BSI-CS 134, the BSI pays particular attention to anomaly detection as a means of protecting networks. By analyzing (behavior) patterns, unusual activities and threats such as cyber attacks can be detected early on, data leaks revealed or system failures prevented. In network monitoring, for example, abnormal data traffic or unusual user activity can be identified, and malicious code can be detected by anti-virus software.

Unlike conventional signature-based systems, which only recognize known threats, ML algorithms learn continuously and can detect anomalies in real time. This helps counter new threats such as zero-day exploits.
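The principle of learning normal behavior and flagging deviations can be illustrated with a deliberately simple detector for a single metric, such as outbound traffic per minute. This sketch assumes a z-score threshold of 3 and a warm-up period of 10 observations, both hypothetical values, and updates its baseline continuously with Welford's online algorithm instead of retraining on stored history. Production systems use many features and richer models; this only shows the core idea.

```python
import math


class StreamingAnomalyDetector:
    """Learns a running baseline (mean and variance) of one metric and flags strong deviations."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold  # z-score above which a value counts as anomalous (assumed)
        self.warmup = warmup        # observations needed before anything is flagged (assumed)
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def _update(self, x: float) -> None:
        """Welford's online update of mean and variance."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def observe(self, x: float) -> bool:
        """Return True if x deviates strongly from the baseline learned so far."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                anomalous = True
        # Only normal observations update the baseline, so an ongoing
        # attack does not immediately become the new "normal".
        if not anomalous:
            self._update(x)
        return anomalous
```

After a warm-up on values around 100, a further value of 100 passes unnoticed while a sudden 500 is flagged. Excluding flagged values from the baseline is a design choice: it resists gradual poisoning of the baseline but means legitimate long-term shifts have to be accepted explicitly.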

Sorting and prioritizing alerts remains a key task, and one that is particularly prone to misclassification. Here, ML models can process extremely large volumes of data (scalability) and detect complex correlations that are not immediately obvious to human analysts.

What are the Advantages of Machine Learning?

Through continuous learning and optimization, ML models keep improving, which reduces the number of false positives. They can adapt to changing data patterns without manual updates and remain effective even as threat landscapes and behavior patterns evolve. Furthermore, AI can use existing data to predict future threats and initiate protective measures early on, which considerably reduces the workload on IT departments.

Another major advantage is AI's ability to automate processes. This covers not only detecting attacks in real time but also responding to them. AI agents, i.e., AI systems that act autonomously, enable threats to be detected and eliminated automatically. Routine tasks such as patch management and incident response can also be automated.

Automation and Intelligent Monitoring

Zero trust architectures play a key role in IT security but require extensive monitoring and data processing. Through automation and intelligent monitoring, AI can help detect potential threats in real time and manage access dynamically. AI agents can, for example, analyze the behavior of users and devices. If the AI detects unusual access times or suspicious data volumes, it can react in real time by automatically restricting or blocking access, requesting additional authentication, quarantining affected systems or conducting an in-depth analysis. AI agents can also initiate countermeasures autonomously, for example by restoring corrupted or infected systems to a secure state without human intervention.
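The graded reactions described above can be pictured as a decision step that maps observed signals to a response. The sketch below uses fixed, hypothetical thresholds (business hours, a typical data volume) as a stand-in for values an AI system would learn per user or device; the names `Activity`, `Action` and `choose_response` are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEP_UP_AUTH = "request additional authentication"
    RESTRICT = "restrict or block access"
    QUARANTINE = "quarantine system"


@dataclass
class Activity:
    user: str
    hour: int            # local hour of the access, 0-23
    bytes_out: int       # data volume transferred in the observation window
    malware_alert: bool  # e.g. reported by endpoint protection


# Assumed baseline values; a real system would learn these per user or device.
BUSINESS_HOURS = range(7, 20)
TYPICAL_BYTES_OUT = 50_000_000  # 50 MB


def choose_response(a: Activity) -> Action:
    """Map observed signals to a graded response, checking the most severe case first."""
    if a.malware_alert:
        return Action.QUARANTINE
    unusual_time = a.hour not in BUSINESS_HOURS
    unusual_volume = a.bytes_out > 10 * TYPICAL_BYTES_OUT
    if unusual_time and unusual_volume:
        return Action.RESTRICT
    if unusual_time or unusual_volume:
        return Action.STEP_UP_AUTH
    return Action.ALLOW
```

Grading the response keeps a single unusual signal, such as a late login, from locking out a legitimate user, while a combination of signals triggers a stronger reaction.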

Integrating AI into authentication and access control systems can help overcome known weaknesses, such as those of purely password-based solutions. This improves the accuracy and reliability of authentication and increases the security of the system.

The targeted implementation of AI can help companies save time and resources, drastically increase the speed at which companies respond to threats and, at the same time, reduce the risk of human error.

What is Retrieval-Augmented Generation?

Retrieval-augmented generation (RAG) is one of the most important applications of AI. A RAG system retrieves information from various data sources and uses it when generating responses. Instead of relying solely on its training data, it can, when required, access other sources such as an organization's databases to improve its responses or close knowledge gaps. In this way, RAG extends the already powerful capabilities of large language models (LLMs) without the model having to be retrained.

Example: A user asks about a specific technical detail in a manual. The RAG system retrieves relevant text segments from its document database and forwards them, together with the user's question, to the AI model, which uses this data to generate an informed response. In this way, even complex questions can be answered with tailored responses, and the available knowledge can be expanded dynamically. Hallucinations, i.e., responses invented by the model itself, can also be reduced significantly.
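The retrieval step in this example can be sketched in a few lines. Real RAG systems usually compare embedding vectors from a neural model; this sketch substitutes simple word-count vectors and cosine similarity so it runs without external libraries. The manual excerpts are invented, and the prompt returned by the hypothetical `build_prompt` function would then be forwarded to the language model, which is not part of the sketch.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k text segments most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Combine the retrieved segments and the user's question into one prompt for the model."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Instructing the model to answer only from the supplied context, and to say when the context is insufficient, is what lets retrieval reduce hallucinations; it does not rule them out.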

![genugate visual](/fileadmin/_processed_/7/e/csm_genugate-visual_1650335503.png)

![genuscreen visual](/fileadmin/_processed_/f/e/csm_genuscreen-visual_17047e68b4.png)